Dropping SMPTE LTC for the project

Well, having learned from a friend of mine who does some editing on Final Cut Pro (FCP) how he syncs video, I decided to change the principle of this project.

The functionality of PluralEyes (using two soundtracks of the same scene to synchronize video tracks) is now built into numerous editing programs: Adobe Premiere Pro CC, Apple FCP, Kdenlive, DaVinci Resolve and Avid Media Composer (EditShare Lightworks is absent from this list). All have some kind of multicam audio syncing and/or waveform analysis.

So SMPTE timecode isn't required nowadays to automate track syncing: audio analysis can do the job (I will miss those drop-frame vs. non-drop-frame forum threads, though...). Sure, waveform analysis implementations and algorithms vary among vendors (ideally running the syncing process in the background, or even using GPU acceleration), but the approach seems to work well and has been adopted by user communities. So there you go: no need anymore to build an Arduino-based, GPS-disciplined, UTC time-of-day linear timecode generator!
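
For anyone who never had the pleasure of those threads: NTSC-derived video runs at 30000/1001 (about 29.97) fps, so a timecode that counts 30 labels per second slowly falls behind the wall clock. Drop-frame timecode compensates by skipping the labels ;00 and ;01 at the start of every minute except minutes divisible by ten; no actual frames are dropped, only label numbers. Below is a minimal Python sketch of the usual frame-count-to-label conversion. The constant and function names are hypothetical, and it assumes a 0-based frame count within a single day.

```python
FPS_NOMINAL = 30  # drop-frame labels still count 30 per second
DROP_PER_MINUTE = 2  # labels ;00 and ;01 are skipped in most minutes
FRAMES_PER_MINUTE = 60 * FPS_NOMINAL - DROP_PER_MINUTE  # 1798 real frames in a "dropping" minute
FRAMES_PER_10_MINUTES = 10 * 60 * FPS_NOMINAL - 9 * DROP_PER_MINUTE  # 17982


def frames_to_df_timecode(frame: int) -> str:
    """Convert a 0-based frame count at 30000/1001 fps into a drop-frame
    label HH:MM:SS;FF (the ';' conventionally marks drop-frame)."""
    blocks, rem = divmod(frame, FRAMES_PER_10_MINUTES)
    # Every complete 10-minute block skipped 9 minutes x 2 labels = 18 labels
    skipped = 9 * DROP_PER_MINUTE * blocks
    # Minute 0 of a block skips nothing; minutes 1..9 each skip 2 labels.
    # The guard avoids (rem - 2) // ... flooring to -1 when rem is 0 or 1.
    if rem >= DROP_PER_MINUTE:
        skipped += DROP_PER_MINUTE * ((rem - DROP_PER_MINUTE) // FRAMES_PER_MINUTE)
    label = frame + skipped  # position on the nominal 30-labels-per-second grid
    ff = label % FPS_NOMINAL
    ss = (label // FPS_NOMINAL) % 60
    mm = (label // (60 * FPS_NOMINAL)) % 60
    hh = label // (3600 * FPS_NOMINAL)
    return f"{hh:02d}:{mm:02d}:{ss:02d};{ff:02d}"


for n in (1799, 1800, 17982, 107892):
    print(n, frames_to_df_timecode(n))
```

Frame 1799 reads 00:00:59;29 and frame 1800 jumps straight to 00:01:00;02, while the tenth minute (frame 17982) starts normally at 00:10:00;00. After 107,892 real frames the label reads 01:00:00;00.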

Waveform analysis is all good, but, but... What if the audio on one of your cameras is bad? What if a camera is too far from the scene to pick up any sound at all, such as a drone? Multicam shooting (with multiple recorders) needs a single, high-quality broadcast audio signal to permit syncing. Why not use the FM band? WHY NOT USE AN FM STATION'S PROGRAM as a scratch audio track for each device? Mwah ha ha! [evil laugh]
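
Whatever each vendor actually implements, the textbook core of waveform syncing is cross-correlation: slide one track against the other and keep the lag where they agree best. Here is a brute-force Python sketch under made-up assumptions: the FM program is simulated as white noise, each device adds its own noise, and the drone records nothing of the scene, only the broadcast. `estimate_offset` and `simulate_tracks` are hypothetical helpers for illustration, not any editing program's API.

```python
import random


def estimate_offset(ref, other, max_lag):
    """Brute-force cross-correlation: return the lag (in samples) at which
    `other` best matches `ref`, searching -max_lag..+max_lag.

    A positive result means the shared sound appears `lag` samples later
    in `other` than in `ref`, i.e. other[n + lag] ~ ref[n].
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        total, count = 0.0, 0
        for n, x in enumerate(ref):
            m = n + lag
            if 0 <= m < len(other):
                total += x * other[m]
                count += 1
        # Average over the overlap so lags with less overlap are not penalized
        score = total / count if count else float("-inf")
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def simulate_tracks(delay, length=1000, seed=42):
    """Hypothetical test data: a shared 'FM program' (white-noise stand-in)
    recorded by a camera and by a drone that started `delay` samples earlier."""
    rng = random.Random(seed)
    program = [rng.gauss(0, 1) for _ in range(length)]
    # Camera A: the program plus its own local sound, modeled as noise
    cam_a = [p + 0.5 * rng.gauss(0, 1) for p in program]
    # Drone: no scene audio, only the program, which shows up `delay`
    # samples later in its track because it started recording earlier
    drone = [0.5 * rng.gauss(0, 1) for _ in range(delay)]
    drone += [p + 0.5 * rng.gauss(0, 1) for p in program[: length - delay]]
    return cam_a, drone


cam_a, drone = simulate_tracks(delay=137)
lag = estimate_offset(cam_a, drone, max_lag=200)
print(f"program appears {lag} samples later in the drone track")
```

On these simulated tracks the estimate comes back as the simulated 137 samples; dividing by the recorders' sample rate gives the offset in seconds. The double loop costs O(N x lags), so for long recordings FFT-based correlation is the usual way to get the same answer faster.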

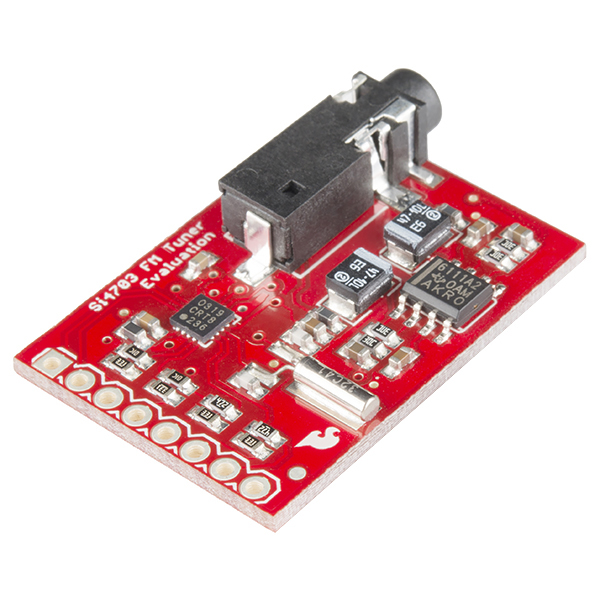

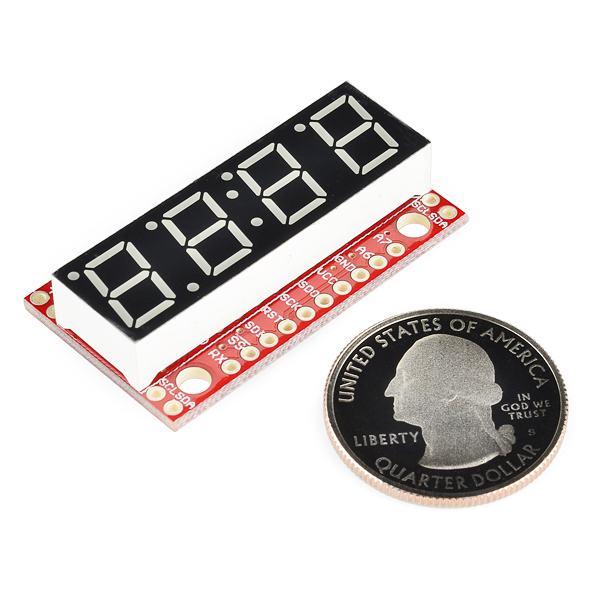

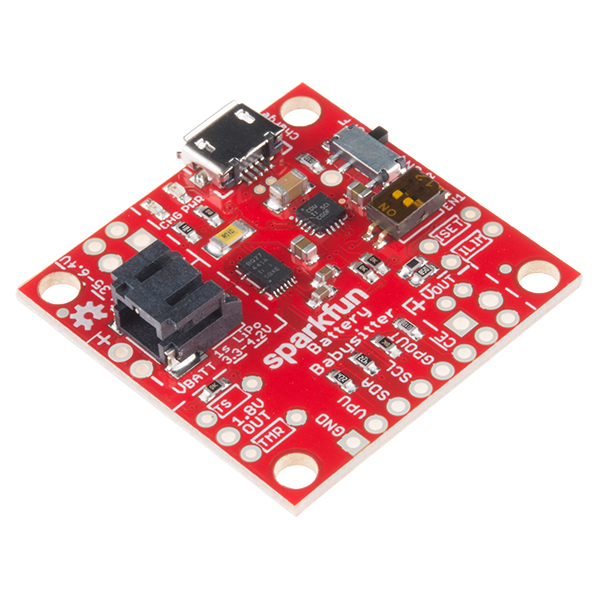

Enter the SyncFM gizmo:


Hi Raymond.

I still find your project interesting.

A Tentacle system is like 400 bucks per unit.

I think a cheap option should be built.

Well, I just found this: http://www.qrp-labs.com/progrock.html, a GPS-disciplined clock. It would greatly simplify the timecode generation.


Two years later... still at the drawing board... and still no open-hardware alternative to Tentacle... sigh (I've got the ProgRock somewhere on my bench)
